How AI Is A Sign Of Collapse

Artificial Intelligence, self-checkout, electric cars, reusable rockets. Modern 'innovation' doesn't actually create anything new (nouns); it just adds more complication to existing technology (adjectives). Modern civilization is just getting more and more complicated, which only makes it more fragile. Indeed, we are witnessing precisely what Joseph Tainter described in his book, The Collapse of Complex Societies. Joe said:

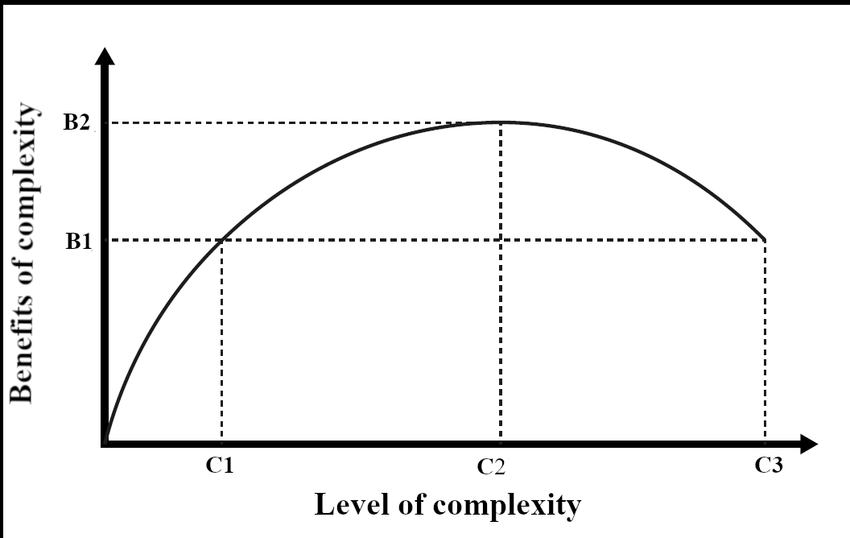

It is the thesis of this chapter that return on investment in complexity varies, and that this variation follows a characteristic curve. More specifically, it is proposed that, in many crucial spheres, continued investment in sociopolitical complexity reaches a point where the benefits for such investment begin to decline, at first gradually, then with accelerated force. Thus, not only must a population allocate greater and greater amounts of resources to maintaining an evolving society, but after a certain point, higher amounts of this investment will yield smaller increments of return. Diminishing returns, it will be shown, are a recurrent aspect of sociopolitical evolution, and of investment in complexity. The principle of diminishing returns is one of the few phenomena of such regularity and predictability that economists are willing to call it a 'law' (Hadar 1966: 30).

In addition to economics, you can also observe this phenomenon in your own life or body. Every sensation fades and requires more and more stimulus to feel; you can observe this from taking ecstasy or through Buddhist meditation. It is even akin to the second law of thermodynamics, wherein every system naturally trends towards greater entropy unless you apply more and more energy to fight the arrow of time. You can find this phenomenon everywhere, including behind your own eyeballs, but let's just open our eyes to the few examples I listed above. Actually, just one example because my son is waking up.

Artificial Intelligence

Applying the adjective 'artificial' to what we vainly call intelligence just uses massive energy consumption and complexity to create village idiots. To hear it from the beast's mouth, this is Jim Covello of Goldman Sachs:

My main concern is that the substantial cost to develop and run AI technology means that AI applications must solve extremely complex and important problems for enterprises to earn an appropriate return on investment (ROI). We estimate that the AI infrastructure buildout will cost over $1tn in the next several years alone, which includes spending on data centers, utilities, and applications. So, the crucial question is: What $1tn problem will AI solve? Replacing low-wage jobs with tremendously costly technology is basically the polar opposite of the prior technology transitions I’ve witnessed.

Seriously, what big problems does AI solve? Producing massive amounts of self-confident bullshit? We already have Americans. Producing kill lists? We already have 'Israelis'. Drawing pictures? We've been doing this since the cave man days. 'Optimizing', finding 'efficiencies', these are all literally marginal gains, achieved with massive complexity (and piss-poor reliability), precisely what Tainter described in his thesis. Generative AI burns a rainforest to produce a spark. As Joe said:

More complex societies are more costly to maintain than simpler ones, requiring greater support levels per capita. As societies increase in complexity, more networks are created among individuals, more hierarchical controls are created to regulate these networks, more information is processed, there is more centralization of information flow, and there is an increasing need to support specialists not directly involved in resource production. All of this complexity is dependent upon energy flow at a scale vastly greater than that characterizing small groups of self-sufficient foragers or agriculturalists. The result is that as a society evolves toward greater complexity, the support costs levied on each individual will also rise, so that the population as a whole must allocate increasing portions of its energy budget to maintaining organizational institutions. This is an immutable fact of societal evolution, and is not mitigated by the type of energy source.

As an example, what do I use AI for (I'm sorry)? When I copy-paste these quotes in, the formatting is wonky, something which would take five minutes and a cornflake's worth of energy for me to fix myself. Instead I ask ChatGPT to do it because I'm lazy. I am just an average poet, not some new category of human, and I require massive neural networks to just remember stuff, something the ancients could do naturally and much better! As Socrates said (in Plato's Phaedrus) when literacy was the hot new technology:

The story goes that Thamus said much to Theuth, both for and against each art, which it would take too long to repeat. But when they came to writing, Theuth said: “O King, here is something that, once learned, will make the Egyptians wiser and will improve their memory; I have discovered a potion for memory and for wisdom.” Thamus, however, replied: “O most expert Theuth, one man can give birth to the elements of an art, but only another can judge how they can benefit or harm those who will use them. And now, since you are the father of writing, your affection for it has made you describe its effects as the opposite of what they really are. In fact, it will introduce forgetfulness into the soul of those who learn it: they will not practice using their memory because they will put their trust in writing, which is external and depends on signs that belong to others, instead of trying to remember from the inside, completely on their own. You have not discovered a potion for remembering, but for reminding; you provide your students with the appearance of wisdom, not with its reality. Your invention will enable them to hear many things without being properly taught, and they will imagine that they have come to know much while for the most part they will know nothing. And they will be difficult to get along with, since they will merely appear to be wise instead of really being so.”

What an apt description of the ills of AI, from thousands of years ago. Socrates said, “they will imagine that they have come to know much while for the most part they will know nothing.” I have simply taken a dubious philosophical idea (writing) to its most destructive conclusion, deploying that “which is external and depends on signs that belong to others” to have shittier recall than your average village griot from yonks ago. That guy could reproduce passages from memory for a few olives and a splash of wine, whereas I require specialized memory cards and offerings of water and oil that actually belong to the future.

As Covello said in the Goldman Sachs report, “AI bulls seem to just trust that use cases will proliferate as the technology evolves. But eighteen months after the introduction of generative AI to the world, not one truly transformative—let alone cost-effective—application has been found.” Note that Goldman Sachs makes more money as this AI boom goes on. There's nobody more incentivized to hype it up, but even they're like “uh, this is bullshit.” As the paper says in the intro:

The bigger question seems to be whether power supply can keep up. GS US and European utilities analysts Carly Davenport and Alberto Gandolfi, respectively, expect the proliferation of AI technology, and the data centers necessary to feed it, to drive an increase in power demand the likes of which hasn’t been seen in a generation, which GS commodities strategist Hongcen Wei finds early evidence of in Virginia, a hotbed for US data center growth.

As we become increasingly aware of the costs of massive energy use, we are using even more massive amounts of energy to solve Sudoku-like puzzles to create speculative cryptocurrencies (this is roughly how Bitcoin mining works: brute-force guessing at puzzles that are hard to solve and easy to check) and even more massive amounts of energy to produce verbal diarrhea (an apt description of generative AI). This seems inane unless you consider Tainter's (disputed) thesis that all complex societies collapse this way: through increasing complexity for diminishing returns.

I'd like to get into this more, but my children have both woken up and will soon start generating pre-industrial amounts of bullshit, which is at least endearing and requires only cornflakes to fuel. So I'll expand on this simple concept of catastrophic complexity next time. Just note that this may be a while. I need a weird charger adapter here and I have my charges to worry about. My seemingly simple writing output is actually complicated, and thus prone to falling apart.